ACE-Step 1.5: Pushing the Boundaries of Open-Source Music Generation

📝 Abstract

🚀 We present ACE-Step v1.5, a highly efficient open-source music foundation model that brings commercial-grade generation to consumer hardware. On commonly used evaluation metrics (Table 1), ACE-Step v1.5 achieves quality beyond most commercial music models while remaining extremely fast: under 2 seconds per full song on an A100 and under 10 seconds on an RTX 3090. The model runs locally with less than 4 GB of VRAM and supports lightweight personalization: users can train a LoRA from just a few songs to capture their own style.
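To give a rough sense of why LoRA personalization is lightweight, the sketch below counts trainable parameters for one weight matrix under the standard LoRA formulation, where a `d_out x d_in` weight is adapted by a low-rank product of a `d_out x r` and an `r x d_in` matrix. The layer size and rank used here are hypothetical illustration values, not ACE-Step's actual configuration.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple:
    """Return (full, lora) trainable-parameter counts for one weight matrix.

    full: parameters updated when fine-tuning the whole d_out x d_in matrix.
    lora: parameters in the two low-rank factors B (d_out x rank) and A (rank x d_in).
    """
    if min(d_out, d_in, rank) <= 0:
        raise ValueError("all dimensions must be positive")
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


# Hypothetical layer: 1024 x 1024 projection adapted with rank 8 (assumed values).
full, lora = lora_param_counts(1024, 1024, 8)
print(f"full fine-tune: {full} params, LoRA: {lora} params ({lora / full:.2%})")
```

With these assumed sizes the adapter trains well under 2% of the layer's parameters, which is the general reason a handful of songs can be enough to fit a style adapter.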

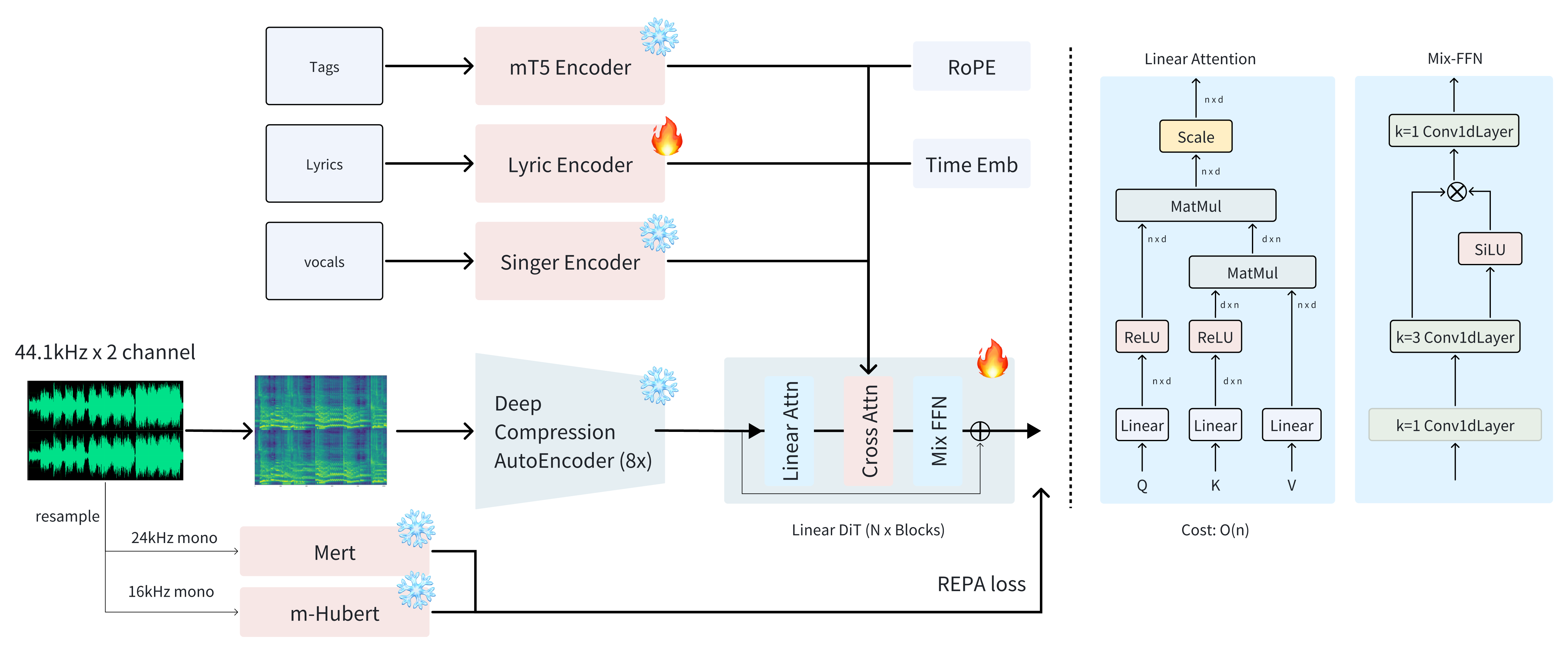

🌉 At its core lies a novel hybrid architecture where the Language Model (LM) functions as an omni-capable planner: it transforms simple user queries into comprehensive song blueprints, scaling from short loops to 10-minute compositions, while synthesizing metadata, lyrics, and captions via Chain-of-Thought to guide the Diffusion Transformer (DiT). ⚡ Uniquely, the model is aligned through intrinsic reinforcement learning that relies solely on its own internal mechanisms, which avoids the biases inherent in external reward models or human preferences. 🎚️
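The planner-then-renderer split described above can be pictured as a two-stage data flow. The following is a minimal sketch under stated assumptions: `SongBlueprint`, `plan_song`, and `render_audio` are hypothetical names invented for illustration and are not part of the ACE-Step codebase, and both stages are stubs (fixed placeholder text and silence) rather than real models.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SongBlueprint:
    """Hypothetical container for what the LM planner hands to the DiT."""
    caption: str
    lyrics: str
    duration_s: float
    metadata: dict = field(default_factory=dict)


def plan_song(query: str, duration_s: float = 30.0) -> SongBlueprint:
    """Stand-in for the LM planner: expand a short query into a blueprint.

    A real planner would generate these fields with Chain-of-Thought decoding;
    this stub fills them with placeholder text.
    """
    if not 0 < duration_s <= 600:  # the text mentions songs up to 10 minutes
        raise ValueError("duration_s must be in (0, 600]")
    return SongBlueprint(
        caption=f"A track matching the request: {query}",
        lyrics="[verse]\n(placeholder lyrics)",
        duration_s=duration_s,
        metadata={"source_query": query},
    )


def render_audio(blueprint: SongBlueprint, sample_rate: int = 44100) -> list[float]:
    """Stand-in for the DiT: returns silence of the requested length.

    The sample rate is an assumed value for illustration only.
    """
    return [0.0] * int(blueprint.duration_s * sample_rate)


blueprint = plan_song("upbeat synth-pop about summer", duration_s=2.0)
audio = render_audio(blueprint, sample_rate=8000)
print(blueprint.caption, len(audio))
```

The point of the sketch is the interface: the renderer only sees the structured blueprint, never the raw user query, so the planner is free to enrich or rewrite the request before synthesis.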

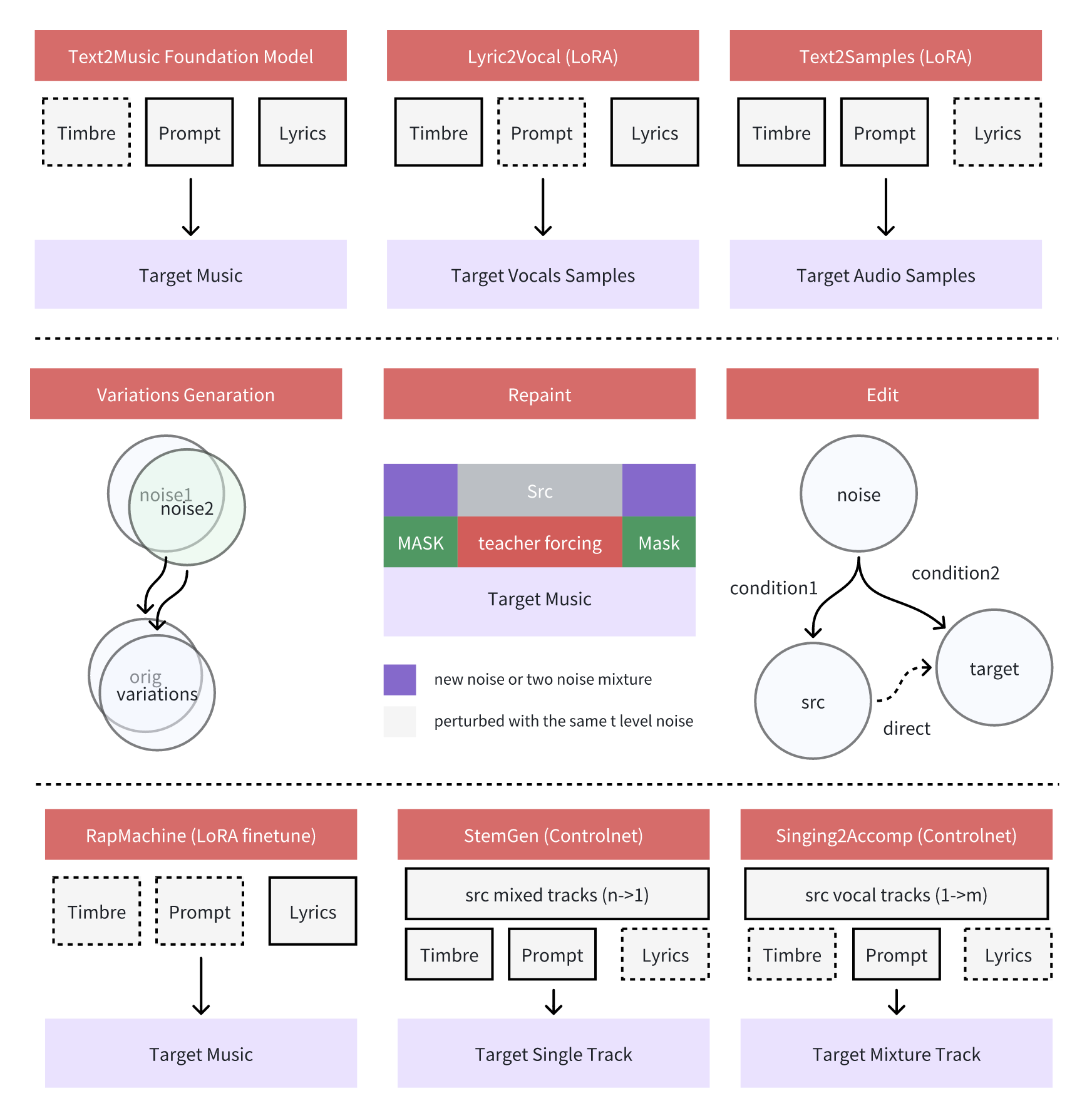

🔮 Beyond standard synthesis, ACE-Step v1.5 unifies precise stylistic control with versatile editing capabilities—such as cover generation, repainting, and vocal-to-BGM conversion—while maintaining strict adherence to prompts across 50+ languages. This paves the way for powerful tools that seamlessly integrate into the creative workflows of music artists, producers, and content creators. 🎸

| Model | AudioBox CE ↑ | AudioBox CU ↑ | AudioBox PC ↑ | AudioBox PQ ↑ | SongEval Coh. ↑ | SongEval Mus. ↑ | SongEval Mem. ↑ | SongEval Cla. ↑ | SongEval Nat. ↑ | Style Align ↑ | Lyric Align ↑ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Udio-v1.5 | 7.45 | 7.65 | 6.15 | 8.03 | 4.15 | 3.96 | 4.09 | 3.93 | 3.86 | 34.9 | 24.8 |
| Suno-v4.5 | 7.63 | 7.85 | 6.22 | 8.25 | 4.64 | 4.51 | 4.63 | 4.53 | 4.49 | 40.5 | 32.7 |
| Suno-v5 | 7.69 | 7.87 | 6.51 | 8.29 | 4.72 | 4.62 | 4.71 | 4.63 | 4.56 | 46.8 | 34.2 |
| Mureka-V7.6 | 7.44 | 7.71 | 6.35 | 8.13 | 4.43 | 4.29 | 4.35 | 4.29 | 4.21 | 36.2 | 22.4 |
| MinMax-2.0 | 7.71 | 7.95 | 6.42 | 8.38 | 4.61 | 4.51 | 4.59 | 4.50 | 4.41 | 43.1 | 29.5 |
| Yue | 6.58 | 7.29 | 4.95 | 7.39 | 3.01 | 2.80 | 2.85 | 2.79 | 2.82 | 26.8 | −4.6 |
| ACE-Step 1.0 | 7.22 | 7.52 | 6.50 | 7.76 | 3.99 | 3.73 | 3.85 | 3.78 | 3.68 | 28.5 | 0.9 |
| LeVo | 7.61 | 7.78 | 5.92 | 8.31 | 3.55 | 3.35 | 3.32 | 3.31 | 3.20 | 29.4 | −1.2 |
| DiffRhythm 2 | 7.25 | 7.61 | 6.33 | 7.99 | 3.99 | 3.79 | 3.97 | 3.82 | 3.66 | 32.1 | 3.8 |
| HeartMuLa | 7.66 | 7.89 | 6.15 | 8.25 | 4.68 | 4.55 | 4.69 | 4.55 | 4.45 | 31.7 | 28.6 |
| ACE-Step 1.5 | 7.42 | 8.09 | 6.47 | 8.35 | 4.72 | 4.67 | 4.72 | 4.66 | 4.59 | 39.1 | 26.3 |

Table 1: Comparison with commercial (top) and open-source (bottom) music generation models. Bold = best, underline = second best. ↑ higher is better.
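As one way to read the SongEval columns of Table 1, the sketch below averages the five sub-scores (Coh., Mus., Mem., Cla., Nat.) per model and ranks the models. The unweighted mean is an assumption made here for illustration; the source does not define an aggregate SongEval score.

```python
# SongEval sub-scores copied from Table 1: Coh., Mus., Mem., Cla., Nat.
songeval = {
    "Udio-v1.5": [4.15, 3.96, 4.09, 3.93, 3.86],
    "Suno-v4.5": [4.64, 4.51, 4.63, 4.53, 4.49],
    "Suno-v5": [4.72, 4.62, 4.71, 4.63, 4.56],
    "Mureka-V7.6": [4.43, 4.29, 4.35, 4.29, 4.21],
    "MinMax-2.0": [4.61, 4.51, 4.59, 4.50, 4.41],
    "Yue": [3.01, 2.80, 2.85, 2.79, 2.82],
    "ACE-Step 1.0": [3.99, 3.73, 3.85, 3.78, 3.68],
    "LeVo": [3.55, 3.35, 3.32, 3.31, 3.20],
    "DiffRhythm 2": [3.99, 3.79, 3.97, 3.82, 3.66],
    "HeartMuLa": [4.68, 4.55, 4.69, 4.55, 4.45],
    "ACE-Step 1.5": [4.72, 4.67, 4.72, 4.66, 4.59],
}


def mean(values):
    """Unweighted arithmetic mean (an assumed aggregate, not from the source)."""
    return sum(values) / len(values)


# Rank models from highest to lowest mean SongEval sub-score.
ranking = sorted(songeval, key=lambda name: mean(songeval[name]), reverse=True)

for name in ranking[:3]:
    print(f"{name}: {mean(songeval[name]):.3f}")
```

Under this assumed aggregate, ACE-Step 1.5 ranks first on SongEval, narrowly ahead of Suno-v5, while the Style Align and Lyric Align columns of the same table still favor Suno-v5.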

🎵 Examples

| Caption | Lyrics | ACE-Step generated |
|---|---|---|

🏗️ Framework & Application