ACE-Step: A Step Towards Music Generation Foundation Model

📝 Abstract

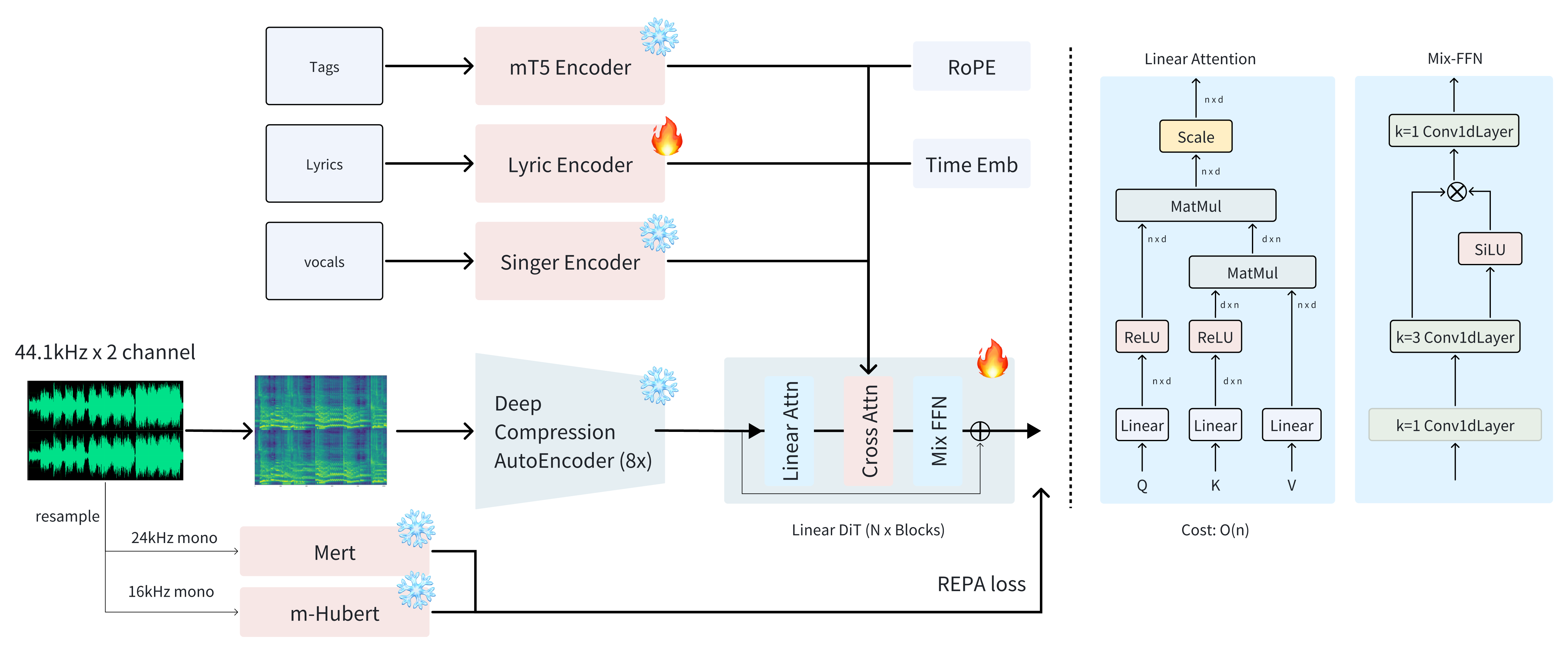

🚀 We introduce ACE-Step, a novel open-source foundation model for music generation that overcomes key limitations of existing approaches and achieves state-of-the-art performance through a holistic architectural design. Current methods face inherent trade-offs between generation speed, musical coherence, and controllability. For instance, LLM-based models (e.g., Yue, SongGen) excel at lyric alignment but suffer from slow inference and structural artifacts. Diffusion models (e.g., DiffRhythm), on the other hand, enable faster synthesis but often lack long-range structural coherence. 🎼

🌉 ACE-Step bridges this gap by integrating diffusion-based generation with Sana's Deep Compression AutoEncoder (DCAE) and a lightweight linear transformer. It further leverages MERT and m-hubert to align semantic representations (REPA) during training, enabling rapid convergence. As a result, our model synthesizes up to 4 minutes of music in just 20 seconds on an A100 GPU—15× faster than LLM-based baselines—while achieving superior musical coherence and lyric alignment across melody, harmony, and rhythm metrics. ⚡ Moreover, ACE-Step preserves fine-grained acoustic details, enabling advanced control mechanisms such as voice cloning, lyric editing, remixing, and track generation (e.g., lyric2vocal, singing2accompaniment). 🎚️
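As a quick sanity check on the speed figures above, the arithmetic can be sketched as follows. The variable names are illustrative. The implied baseline time assumes that "15× faster" refers to wall-clock time for the same 4-minute clip, which the text does not state explicitly.

```python
# Figures quoted in the abstract.
audio_seconds = 4 * 60        # up to 4 minutes of generated music
generation_seconds = 20       # reported generation time on an A100 GPU
speedup_vs_llm = 15           # reported speedup over LLM-based baselines

# Seconds of audio produced per second of compute.
real_time_factor = audio_seconds / generation_seconds

# Assumption: the 15x figure compares wall-clock time on the same clip.
implied_llm_seconds = generation_seconds * speedup_vs_llm

print(f"Real-time factor: {real_time_factor:.0f}x")
print(f"Implied LLM-baseline time: {implied_llm_seconds} s")
```

Under that reading, an LLM-based baseline would need roughly five minutes for the same clip, which is slower than real time.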

🔮 Rather than building yet another end-to-end text-to-music pipeline, our vision is to establish a foundation model for music AI: a fast, general-purpose, efficient yet flexible architecture that makes it easy to train sub-tasks on top of it. This paves the way for developing powerful tools that seamlessly integrate into the creative workflows of music artists, producers, and content creators. In short, we aim to build the Stable Diffusion moment for music. 🎸

🎯 Baseline Quality

📝 Note:

🎵 Lyrics are randomly picked from the AI music generation community or the internet and are not in our training set.

📏 Existing models either lack length control (LLMs) or are fixed-length (diffusion). We enable flexible length for practical music composition.

💻 B.T.W., the project page is vibe coded by Roocode. 😊

| Prompt | Lyrics | ACE-Step generated |
|---|---|---|

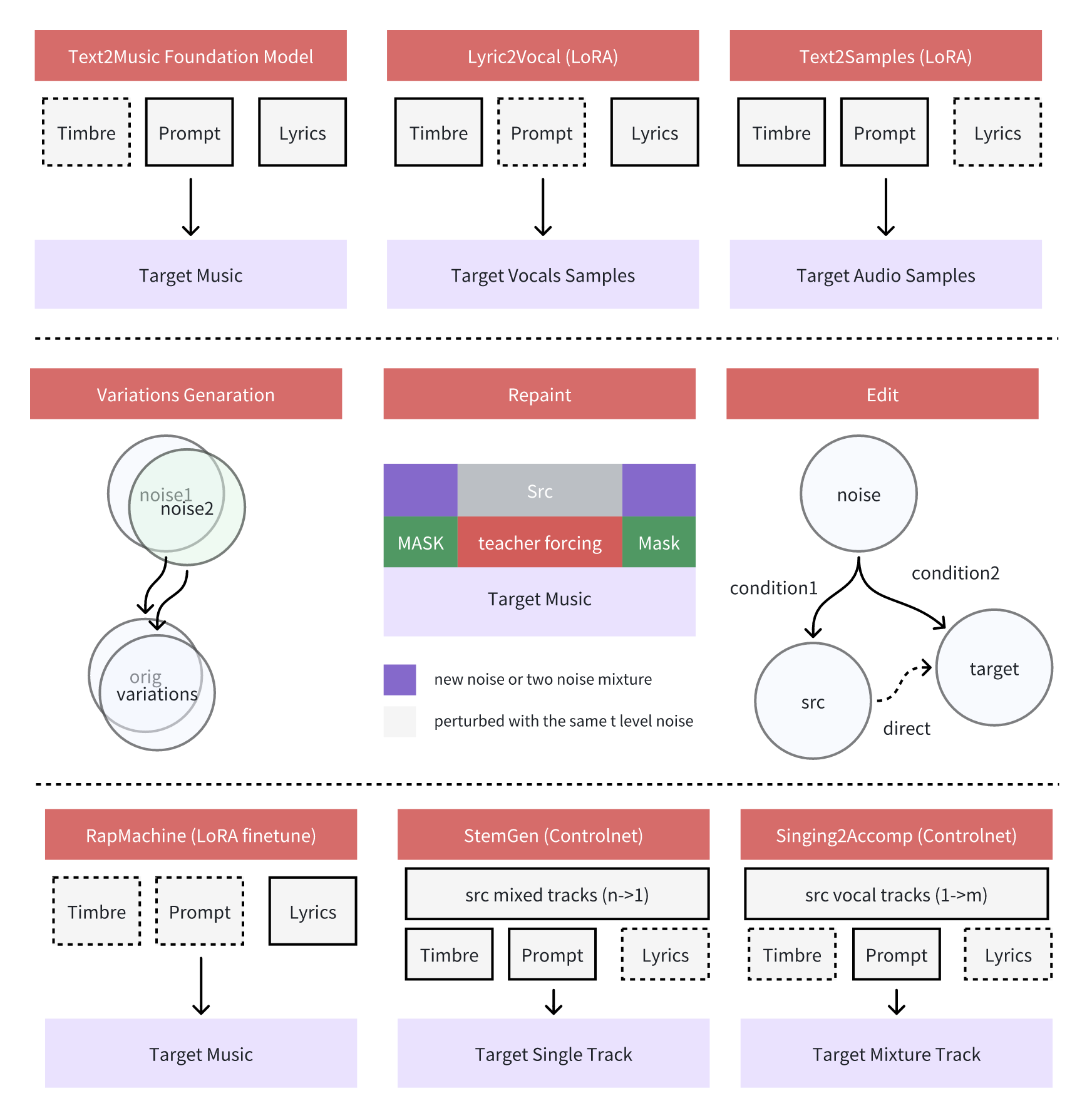

🎛️ Controllability

📝 Note:

The model supports various training-free applications:

- retake: regenerate a variation of the same song.

- repaint: regenerate a specific part of the song.

- edit: modify the lyrics of the song.
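The page does not describe how repaint selects the region to regenerate. As a minimal sketch of one common setup for inpainting-style regeneration, a time span can be converted into a per-frame boolean mask over the latent sequence. The function name `repaint_mask` and the `frames_per_second` value are hypothetical and are not taken from ACE-Step.

```python
import math


def repaint_mask(total_seconds, start_seconds, end_seconds, frames_per_second=10.0):
    """Return a list of booleans, True for latent frames to regenerate.

    frames_per_second is a hypothetical latent frame rate chosen for
    illustration; the actual rate used by ACE-Step is not stated here.
    """
    if not 0 <= start_seconds < end_seconds <= total_seconds:
        raise ValueError("expected 0 <= start < end <= total")
    n_frames = math.ceil(total_seconds * frames_per_second)
    # Round outward so the whole requested span is covered.
    first = math.floor(start_seconds * frames_per_second)
    last = min(n_frames, math.ceil(end_seconds * frames_per_second))
    return [first <= i < last for i in range(n_frames)]


# Regenerate seconds 20 to 30 of a 60-second song.
mask = repaint_mask(60.0, 20.0, 30.0)
print(len(mask), sum(mask))
```

In a setup like this, frames where the mask is False would be kept from the original song, and only the True frames would be resampled.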

| Prompt | Lyrics | ACE-Step generated |
|---|---|---|

💡 Application

📝 Note:

🎵 The model supports various fine-tuned applications:

- Lyric2Vocal: LoRA fine-tuned on pure vocals

- Text2Samples: LoRA fine-tuned on musical samples & loops

- RapMachine: LoRA fine-tuned on rap data

| Prompt | Lyrics | ACE-Step generated |
|---|---|---|

⚠️ Limitations & Future Improvements 🔮

- 🎲 Output Inconsistency: Highly sensitive to random seeds and input duration, leading to varied "gacha-style" results.

- 🎵 Style-specific Weaknesses: Underperforms on certain genres (e.g., Chinese rap/zh_rap), with limited style adherence and a ceiling on musicality.

- 🔄 Continuity Artifacts: Unnatural transitions in repaint/extend operations

- 🎤 Vocal Quality: Coarse vocal synthesis lacking nuance

- 🎛️ Control Granularity: Needs finer-grained musical parameter control

- 🌐 Multilingual Lyrics Compliance: Needs improved support for lyrics in multiple languages to increase accuracy and naturalness.